Why AI Data Security, Sovereignty, and Compliance Need to Be Non-Negotiable

In late July, Fast Company published an exclusive story revealing that Google was actively indexing ChatGPT conversations. Conversations that users believed were private were in fact publicly searchable, creating a major security risk and inadvertently exposing customer data.

While OpenAI’s CISO, Dane Stuckey, has argued that this behavior was labeled when users clicked the share button on their conversations, the incident revives important questions about AI data security, data ownership, and how leading AI enterprises manage their customer data.

Over the past two years, there has been an enormous push to develop, refine, and launch regulatory compliance frameworks that oversee AI data security, user data management, and the secure development of AI tools. What began with the EU’s AI Act has since expanded, with new laws like California’s AI Transparency Act paving the way for the sustainable and ethical use of AI solutions.

With compliance requirements around artificial intelligence developing this rapidly, demonstrating AI data security, sovereignty, and compliance must be non-negotiable for enterprises across the world.

AI Data Exposure Is a Security Threat and a User Risk

The conversation around the OpenAI leak centers less on the event itself than on the fact that data leakage, privacy issues, and opaque user data management practices seem all too common among AI providers.

Only months ago, an independent study funded by the Social Science Research Council concluded that OpenAI was training on copyrighted data. The New York Times has also sued OpenAI over the unauthorized use of its content, adding another name to the list of parties that believe their data is being used without permission by these AI tools.

For consumers, the lack of transparency around how AI companies use and store personal data is extremely worrying. Frontier models are currently black boxes, and the technology is blazing ahead while legislation plays catch-up.

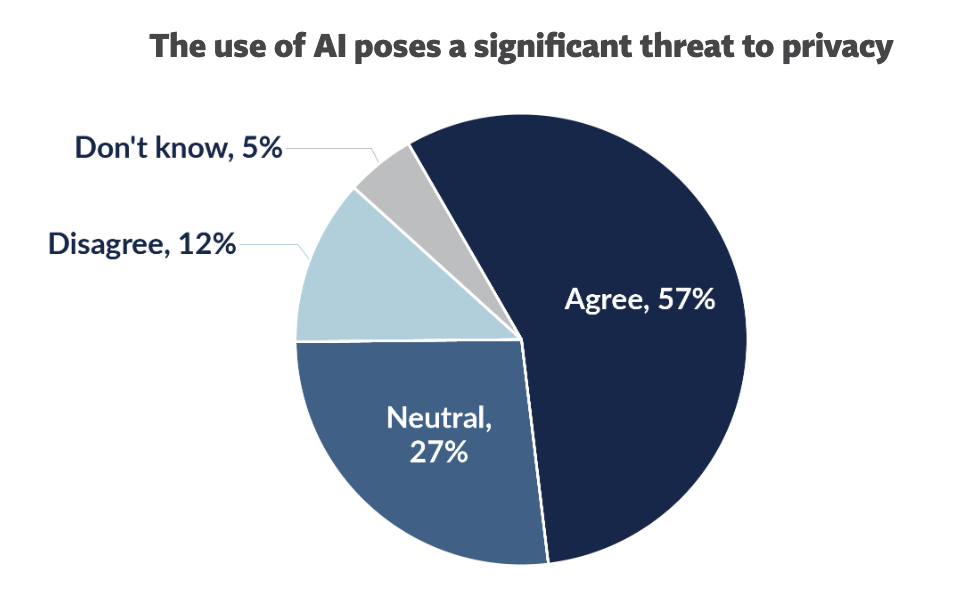

More than 57% of consumers agree that artificial intelligence poses a significant threat to their privacy, and 81% say they are worried that AI enterprises will use their data in ways that were not originally intended. These statistics reflect the widespread mistrust of AI tools, especially those from providers like OpenAI that disclose little about their data usage.

Source: The use of AI and its risks to privacy: consumer sentiment data.

Questions AI Businesses Must Answer to Ensure Data Security

Artificial intelligence businesses must put AI data security first, providing full transparency into how they use customer data, whether or not it is stored, and especially whether or not it becomes part of training data sets.

As regulatory bodies continue to legislate around AI, it’s important that AI enterprises stay ahead of the curve, deliver easy-to-understand content that explains how they use customer data, and continue to build trust with customers.

To help get the ball rolling, here are seven important questions that every AI enterprise should be able to answer:

- Can you guarantee that all voice data processing occurs within our specified geographic region without any external API calls?

- Do you maintain complete audit logs of every system that touches our customer voice data, including timestamps and processing locations?

- In the event of litigation requiring data preservation (like the OpenAI-NYT case where OpenAI must preserve all user data indefinitely), how would you handle court orders affecting third-party providers versus your own infrastructure?

- Can you modify the AI model's behavior within 24 hours if we identify inappropriate responses, without depending on external providers?

- What deterministic, rule-based systems verify that AI responses comply with regulatory requirements before delivery?

- Can you provide evidence that customer data is never used to train models?

- How do you ensure our training data and conversation patterns remain our intellectual property and don't improve models used by others?

These questions strike at the core of data security in AI businesses, clarifying the storage and usage of data while demonstrating alignment with central compliance frameworks.
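Three of these questions (regional processing, audit logs, and deterministic pre-delivery checks) describe controls that can be expressed in code. The following is a minimal sketch of how they might fit together, not a description of any vendor's implementation. Every name in it (`ALLOWED_REGIONS`, `BLOCKED_PATTERNS`, `AuditLog`, `deliver`) and the example rules are hypothetical and chosen only for illustration.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

# Hypothetical policy values, for illustration only.
ALLOWED_REGIONS = {"eu-west-1"}
BLOCKED_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),              # SSN-like number
    re.compile(r"guaranteed returns", re.IGNORECASE),  # prohibited promise
]


@dataclass
class AuditLog:
    """In-memory audit trail; a real system would use durable storage."""
    entries: list = field(default_factory=list)

    def record(self, system: str, region: str, action: str) -> None:
        # Each entry names the system, where it ran, and when (UTC).
        self.entries.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "system": system,
            "region": region,
            "action": action,
        })


def violations(response: str) -> list:
    """Return the pattern of every rule the response breaks."""
    return [p.pattern for p in BLOCKED_PATTERNS if p.search(response)]


def deliver(response: str, system: str, region: str,
            log: AuditLog) -> Optional[str]:
    """Return the response only if region and content rules both pass."""
    if region not in ALLOWED_REGIONS:
        log.record(system, region, "blocked:region")
        return None
    if violations(response):
        log.record(system, region, "blocked:content")
        return None
    log.record(system, region, "delivered")
    return response
```

Because the checks are plain rules rather than model output, the same input always produces the same decision, and each decision leaves a timestamped log entry that can be reviewed later.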

Bland Puts User Security First

At Bland, we’re devoted to transparency. We never share data with frontier model providers, and we never train our models on customer data.

As an AI company that is self-hosted end-to-end and built on open-source foundations, we offer organizations and individuals alike complete control over their data.

We believe that trust in AI starts with complete transparency, ownership, and verifiable auditability. With strict data boundaries, precise data usage policies, and a drive to put your data security first, Bland is committed to producing ethical AI products.