Model Context Protocol (MCP): Standardizing AI Interoperability

The Model Context Protocol (MCP) is the leading open-source framework for integrating AI systems with external tools, systems, and data sources. MCP allows AI systems to move beyond isolated, closed environments and integrate into the wider tech ecosystem – whether that’s pulling information from GitHub or interacting with external databases.

While connecting AI platforms to external systems isn’t new, doing so through a unified, standardized protocol with broad compatibility and prebuilt data connectors represents a major leap forward in ease of development and product scalability. As stated by Anthropic's Alex Albert, Head of Claude Relations:

“AI systems will maintain context as they move between different tools and datasets, replacing today’s fragmented integrations with a more sustainable architecture.”

But how exactly does this protocol transform real-world use cases of AI, and how are businesses harnessing MCP to build smarter, more efficient AI systems? In this article, we’ll answer these questions and explain how Bland uses MCP to deliver world-class AI voice products.

Why Does MCP Matter for Business Outcomes?

Enterprises that want to transform internal AI systems into powerful, adaptable customer-facing applications can use the Model Context Protocol to streamline external connections. MCP standardizes how LLMs connect to and interact with external tools, enabling systems that can reason, respond to queries, take actions, and adapt to a customer’s needs in real time.

Here is why MCP matters for enterprise use cases of AI:

- Enhances Interoperability: Instead of siloed data pools and static models, MCP turns AI systems into tools that can interact with other systems, trigger new workflows, and access live data streams. An interconnected, flexible AI system lets those tools exchange data reliably to better serve the end user.

- Streamlines Integration Pathways: As an open-source standard, MCP provides an accessible way to connect LLMs to external tools without writing custom integration code and building a separate data pipeline for each connection. This enables faster development, increases AI utility, and reduces integration overhead.

- Improves AI Governance: MCP, together with the guidelines that Bland’s Pathways language offers, allows teams to customize how their models access and engage with certain information. These built-in guardrails reduce risk, improve compliance, and help your business keep sensitive information private.

Together, these benefits make MCP a foundational piece of infrastructure for building intelligent, adaptable, and customer-serving AI systems.

What Is the Model Context Protocol: Core Components

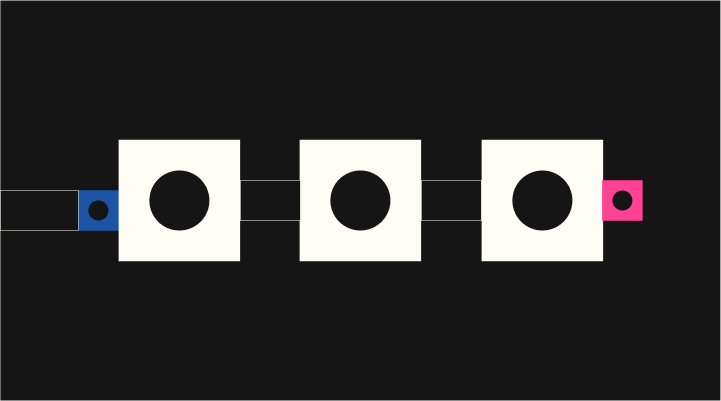

At its core, the Model Context Protocol is a standard that allows an LLM to request information from external systems, query them, and pull data to complete a task. When a user interacts with an LLM, four core components work together to handle the request:

- MCP Host: The MCP host is the primary environment where MCP is implemented. This is typically the AI platform or interface where a customer interacts with the system and begins their query. The host also acts as the overall coordinator between external resources, the client, and the model.

- MCP Client: The MCP client operates inside the host and translates an incoming query into a structured request that it passes on to the MCP server. It also receives the server’s response and transfers it back to the host.

- MCP Server: The MCP server sits in front of an external API, custom webhook, CRM system, database, or other system. It receives the incoming request, locates the data, and returns a result to the MCP client.

- Transport Layer: MCP uses two main transport mechanisms, each of which uses JSON-RPC 2.0 as its message format. Standard input/output (stdio) is best for local message and resource transmission, while server-sent events (SSE) over HTTP are best for accessing remote resources and real-time data sharing. Newer revisions of the MCP specification replace the SSE transport with Streamable HTTP.
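To make the flow between these components more concrete, here is a minimal sketch of a single round trip in Python. It assumes the `tools/call` method and the JSON-RPC 2.0 envelope that MCP uses; everything else (the `lookup_order` tool, its data, and the helper names) is hypothetical and exists only for illustration. The client and server run in the same process here, with a JSON string standing in for one message on the wire.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC requests carry an id so replies can be matched


def build_tool_call(tool_name, arguments):
    """Client side: wrap a tool invocation in a JSON-RPC 2.0 request."""
    return {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def lookup_order(order_id):
    """Hypothetical tool: look up an order status in an in-memory table."""
    orders = {"A-100": "shipped"}
    return orders.get(order_id, "unknown")


# Hypothetical registry of the tools this toy server exposes.
TOOLS = {"lookup_order": lookup_order}


def _error(request_id, code, message):
    return {"jsonrpc": "2.0", "id": request_id,
            "error": {"code": code, "message": message}}


def handle_request(line):
    """Server side: parse one serialized message, run the tool, reply."""
    request = json.loads(line)
    request_id = request.get("id")
    if request.get("method") != "tools/call":
        # Standard JSON-RPC code for an unknown method.
        return json.dumps(_error(request_id, -32601, "Method not found"))
    params = request.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        # Standard JSON-RPC code for invalid parameters.
        return json.dumps(_error(request_id, -32602, "Unknown tool"))
    text = tool(**params.get("arguments", {}))
    result = {"content": [{"type": "text", "text": text}]}
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})


def call_tool(tool_name, arguments):
    """Host-facing helper: serialize, 'send', and decode the reply."""
    wire = json.dumps(build_tool_call(tool_name, arguments))
    return json.loads(handle_request(wire))
```

Calling `call_tool("lookup_order", {"order_id": "A-100"})` returns a response whose `result` carries the tool's text output. In a real deployment the serialized message would travel over stdio or HTTP rather than a function call, and an MCP SDK would usually handle this framing for you.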

While both MCP and RAG (Retrieval-Augmented Generation) supply LLMs with external data, they do so in different ways. RAG enhances model outputs by injecting information from relevant documents retrieved at the time of a query, whereas MCP enables real-time interaction with external systems, tools, or APIs, which lets the model fetch live data or execute additional actions.
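The difference can be illustrated with a toy Python sketch. This is not how either approach is implemented in production: the word-overlap retriever stands in for a real vector search, and the `issue_refund` tool, the `LEDGER` table, and the sample documents are all hypothetical.

```python
DOCS = [
    "Refunds are processed within 5 business days.",
    "Our office is open 9am to 5pm on weekdays.",
]


def rag_prompt(question, docs=DOCS):
    """RAG-style: retrieve the best-matching document and paste it into the prompt."""
    question_words = set(question.lower().split())
    best = max(docs, key=lambda d: len(question_words & set(d.lower().split())))
    return f"Context: {best}\nQuestion: {question}"


# Hypothetical live system that a tool can read from and write to.
LEDGER = {"A-100": "pending"}


def issue_refund(order_id):
    """MCP-style tool: changes state in an external system and reports back."""
    LEDGER[order_id] = "refunded"
    return LEDGER[order_id]


def run_tool_call(call):
    """Dispatch a structured tool call (name plus arguments) chosen by the model."""
    tools = {"issue_refund": issue_refund}
    return tools[call["name"]](**call["arguments"])
```

`rag_prompt` only changes the text the model reads, while `run_tool_call` changes the state of a system outside the model. That is the practical distinction between the two approaches.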

How Bland Connects To External Sources To Bring AI Voice to Millions of Customers

Bland offers ultra-realistic AI voice agents that don’t just respond to queries; they get things done. Through Pathways, businesses can structure the logic, context, flow, and data that Bland’s AI agent will consult at every stage of the conversation.

Bland is also available through Mintlify’s new Model Context Protocol integration, which makes it even easier to get help with Bland AI right from your favorite AI tools. This MCP integration provides real-time access to Bland’s full documentation, meaning you can ask AI assistants about useful API endpoints, key features, and more.

Whether you’re building with Bland or looking for simplified cross-platform support, get started by adding https://docs.bland.ai/mcp to your MCP settings.
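What "adding to your MCP settings" looks like depends on the client you use. As a rough sketch, several MCP clients read a JSON settings file with an `mcpServers` key. The snippet below generates an entry in that shape. The server label `bland-docs` and the exact field names are assumptions, so check your client's documentation for the format and file location it expects.

```python
import json


def mcp_settings(name, url):
    """Build a settings entry in the assumed `mcpServers` shape."""
    return {"mcpServers": {name: {"url": url}}}


# "bland-docs" is an arbitrary label chosen for this example.
settings = mcp_settings("bland-docs", "https://docs.bland.ai/mcp")
print(json.dumps(settings, indent=2))
```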